30-12-2024

11:35

GNOME’s New Image Viewer Adds Image Editing Features [OMG! Ubuntu!]

Loupe (aka Image Viewer) is GNOME’s modern successor to the venerable Eye of GNOME has picked up its first batch of image editing features. The features in question were only recently merged upstream, aren’t finished, and not yet included in a stable build. But they’re an interesting addition that furthers the likelihood that Loupe could become the default image viewer on Ubuntu. At present, Ubuntu continues to use Eye of GNOME as the default tool for opening and browsing image files on desktop, despite Loupe having officially replaced it upstream in the GNOME project as a GNOME Core app. Loupe […]

Loupe (aka Image Viewer) is GNOME’s modern successor to the venerable Eye of GNOME has picked up its first batch of image editing features. The features in question were only recently merged upstream, aren’t finished, and not yet included in a stable build. But they’re an interesting addition that furthers the likelihood that Loupe could become the default image viewer on Ubuntu. At present, Ubuntu continues to use Eye of GNOME as the default tool for opening and browsing image files on desktop, despite Loupe having officially replaced it upstream in the GNOME project as a GNOME Core app. Loupe […]

You're reading GNOME’s New Image Viewer Adds Image Editing Features, a blog post from OMG! Ubuntu. Do not reproduce elsewhere without permission.

Ghostty: New Open Source Terminal That’s Spookily Good [OMG! Ubuntu!]

We’re seeing something of a terminal emulator renaissance of late, with developers eager to reimagine, rethink, and rewire the humble console to leverage modern hardware, GPU acceleration, containerised workflows, and (in some cases) AI/LLMs. Ghostty, a new open-source and cross-platform terminal application created by Mitchell Hashimoto (co-founder of HashiCorp) is the latest to join the fray. Hashimoto’s says he “set out to build a terminal emulator that was fast, feature-rich, and had a platform-native GUI while still being cross-platform.” The first public release materialised over Christmas, rather like a festive Dickensian spook seeking to give us a glimpse into the […]

We’re seeing something of a terminal emulator renaissance of late, with developers eager to reimagine, rethink, and rewire the humble console to leverage modern hardware, GPU acceleration, containerised workflows, and (in some cases) AI/LLMs. Ghostty, a new open-source and cross-platform terminal application created by Mitchell Hashimoto (co-founder of HashiCorp) is the latest to join the fray. Hashimoto’s says he “set out to build a terminal emulator that was fast, feature-rich, and had a platform-native GUI while still being cross-platform.” The first public release materialised over Christmas, rather like a festive Dickensian spook seeking to give us a glimpse into the […]

You're reading Ghostty: New Open Source Terminal That’s Spookily Good, a blog post from OMG! Ubuntu. Do not reproduce elsewhere without permission.

See Pinned Ubuntu Dock Apps in the Application Grid [OMG! Ubuntu!]

You may have noticed (or not) that if an app is pinned to the Ubuntu Dock you don’t see a shortcut for it in the applications grid. This approach is by design to avoid duplication since the dock is always visible (by default) so those app shortcuts are always in reach – each app shortcut only shows once. Not everyone likes this behaviour, especially if Ubuntu Dock auto-hide is enabled. Naturally, there are 3rd-party GNOME Shell extensions one can install to make sure all apps show in the main applications grid irrespective of whether they’re pinned to Ubuntu Dock (or […]

You may have noticed (or not) that if an app is pinned to the Ubuntu Dock you don’t see a shortcut for it in the applications grid. This approach is by design to avoid duplication since the dock is always visible (by default) so those app shortcuts are always in reach – each app shortcut only shows once. Not everyone likes this behaviour, especially if Ubuntu Dock auto-hide is enabled. Naturally, there are 3rd-party GNOME Shell extensions one can install to make sure all apps show in the main applications grid irrespective of whether they’re pinned to Ubuntu Dock (or […]

You're reading See Pinned Ubuntu Dock Apps in the Application Grid, a blog post from OMG! Ubuntu. Do not reproduce elsewhere without permission.

How to Hide Ubuntu Pro Updates in Ubuntu 24.04 LTS [OMG! Ubuntu!]

Ubuntu Pro is an optional security feature that Ubuntu LTS users can enable to receive critical updates for an extra 25,000 packages which would otherwise sit unpatched. If you use Ubuntu 24.04 LTS you will have seen Ubuntu Pro security updates in Software Updater (or when running apt commands). And you will have noticed you can’t install those updates without having an Ubuntu Pro subscription. Ubuntu Pro is free for all users on up to 5 machines, meaning the only ‘cost’ involved is the time it takes to register and setup Ubuntu Pro. Businesses (or home users) with fleets of […]

Ubuntu Pro is an optional security feature that Ubuntu LTS users can enable to receive critical updates for an extra 25,000 packages which would otherwise sit unpatched. If you use Ubuntu 24.04 LTS you will have seen Ubuntu Pro security updates in Software Updater (or when running apt commands). And you will have noticed you can’t install those updates without having an Ubuntu Pro subscription. Ubuntu Pro is free for all users on up to 5 machines, meaning the only ‘cost’ involved is the time it takes to register and setup Ubuntu Pro. Businesses (or home users) with fleets of […]

You're reading How to Hide Ubuntu Pro Updates in Ubuntu 24.04 LTS, a blog post from OMG! Ubuntu. Do not reproduce elsewhere without permission.

OpenShot Video Editor Puts Out an Effortless, Seamless, Etc Update [OMG! Ubuntu!]

A new version of OpenShot video editor is out (a video editor which doesn’t have the best reputation for stability hence the nickname OpenShut). OpenShot—more accurately, ChatGPT or similar—says “OpenShot 3.3 is here to transform your editing experience! This release is as powerful as it is beautiful […] Take your video editing to the next level with OpenShot 3.3. Download it now and see the difference”. The headline change in OpenShot 3.3 is the use of a new default theme called Cosmic Dust. This apparently offers a “modern editing experience”. The new theme looks nice, it I’m not sure it […]

A new version of OpenShot video editor is out (a video editor which doesn’t have the best reputation for stability hence the nickname OpenShut). OpenShot—more accurately, ChatGPT or similar—says “OpenShot 3.3 is here to transform your editing experience! This release is as powerful as it is beautiful […] Take your video editing to the next level with OpenShot 3.3. Download it now and see the difference”. The headline change in OpenShot 3.3 is the use of a new default theme called Cosmic Dust. This apparently offers a “modern editing experience”. The new theme looks nice, it I’m not sure it […]

You're reading OpenShot Video Editor Puts Out an Effortless, Seamless, Etc Update, a blog post from OMG! Ubuntu. Do not reproduce elsewhere without permission.

Spyder IDE: Spyder 6 project lead: Remote development interface and application UI/UX improvements [Planet Python]

Spyder's lead maintainer, Carlos Cordoba, shares his insights on the projects and features he helped develop for Spyder 6.0, particularly UI/UX and where the IDE is headed next.

29-12-2024

20:38

How to Append a Line After a String in a File Using sed Command [Linux Today]

If you’re working with text files on a Linux system, you may need to modify the contents of those files. One useful tool for this task is sed, which stands for stream editor, which allows you to perform various text manipulations, such as replacing text, deleting lines, and adding new lines.

In this article, we’ll show you how to use sed to add a new line after a specific string in a file.

The post How to Append a Line After a String in a File Using sed Command appeared first on Linux Today.

12:25

How to Set Up an AI Development Environment on Ubuntu [Linux Today]

Artificial Intelligence (AI) is one of the most exciting and rapidly evolving fields in technology today. With AI, machines are able to perform tasks that once required human intelligence, such as image recognition, natural language processing, and decision-making.

If you’re a beginner and want to dive into AI development, Linux is an excellent choice of operating system, as it is powerful, flexible, and widely used in the AI community.

In this guide, we’ll walk you through the process of setting up an AI development environment on your Ubuntu system.

The post How to Set Up an AI Development Environment on Ubuntu appeared first on Linux Today.

28-12-2024

20:45

Bah Hum-bugfix – it’s the Christmas Update to Calibre! [OMG! Ubuntu!]

In deep mid-winter nothing beats curling up with a good book, in-front of a roaring fire – the crackle of all the unwanted Christmas tat your nearest and dearest bought you chars, melts, and burns providing a warm aural soundtrack. Thankfully, not everyone’s feeling as seasonably irascible as I am – like the folks behind open-source ebook reader, manager, and converter Calibre. They’ve hand-wrapped a bug-fix update to help tide us over the festive season. Hurrah! As gifts that arrive in late December go, Calibre 7.23 is a modest one: more ‘last-minute box of chocs’ than something you really wanted […]

In deep mid-winter nothing beats curling up with a good book, in-front of a roaring fire – the crackle of all the unwanted Christmas tat your nearest and dearest bought you chars, melts, and burns providing a warm aural soundtrack. Thankfully, not everyone’s feeling as seasonably irascible as I am – like the folks behind open-source ebook reader, manager, and converter Calibre. They’ve hand-wrapped a bug-fix update to help tide us over the festive season. Hurrah! As gifts that arrive in late December go, Calibre 7.23 is a modest one: more ‘last-minute box of chocs’ than something you really wanted […]

You're reading Bah Hum-bugfix – it’s the Christmas Update to Calibre!, a blog post from OMG! Ubuntu. Do not reproduce elsewhere without permission.

How to Use sed for Dynamic Number Replacement in Linux [Linux Today]

The sed command, short for Stream Editor, is a powerful text processing tool in Linux, which is widely used for text manipulation tasks, including searching, finding and replacing text, and even performing advanced scripting.

This article will guide you through the basics of sed, explain how to use it for dynamic number replacement, and provide practical examples for beginners.

The post How to Use sed for Dynamic Number Replacement in Linux appeared first on Linux Today.

Ghostty 1.0 Released, A New GPU-Accelerated Terminal Emulator [Linux Today]

Meet Ghostty 1.0: The GPU-accelerated terminal emulator exits beta after two years, challenging Kitty and Alacritty with its speed and features.

The post Ghostty 1.0 Released, A New GPU-Accelerated Terminal Emulator appeared first on Linux Today.

An Introduction To Wiki.js, a Modern, Lightweight and Powerful Wiki Software Built on NodeJS [Linux Today]

Wiki.js is a powerful and extensible open source wiki software to create the perfect wiki and knowledge base documentation.

The post An Introduction To Wiki.js, a Modern, Lightweight and Powerful Wiki Software Built on NodeJS appeared first on Linux Today.

12 Best Free and Open Source TypeScript-Based Web Content Management Systems [Linux Today]

Here are our favorite TypeScript-based web content management systems. They are all free and open source software.

The post 12 Best Free and Open Source TypeScript-Based Web Content Management Systems appeared first on Linux Today.

How to Install FreshRSS with Docker: A Step-by-Step Guide [Linux Today]

Learn how to install FreshRSS, a self-hosted RSS feed aggregator, with Docker in just a few easy steps and centralize your news feed experience.

The post How to Install FreshRSS with Docker: A Step-by-Step Guide appeared first on Linux Today.

StackLok Is Making Security ‘Boring’ for Open-Source AI-Assisted Devs [Linux Today]

Stacklok’s new CodeGate software removes many of the pain points and security traps for those developing using AI tools.

The post StackLok Is Making Security ‘Boring’ for Open-Source AI-Assisted Devs appeared first on Linux Today.

Zero to Mastery: Python Monthly Newsletter 💻🐍 [Planet Python]

61st issue of Andrei Neagoie's must-read monthly Python Newsletter: Octoverse Results Reveal, GPU Computing, and much more. Read the full newsletter to get up-to-date with everything you need to know from last month.

TestDriven.io: Deploying a Django App to AWS ECS with AWS Copilot [Planet Python]

This tutorial looks at how to deploy a Django app to AWS ECS with AWS Copilot.

Daniel Roy Greenfeld: TIL: yield from [Planet Python]

A variant of the yield statement that can result in more concise code.

Kushal Das: pastewindow.nvim my first neovim plugin [Planet Python]

pastewindow is a neovim plugin written in Lua to help to paste text from a buffer to a different window in Neovim. This is my first attempt of writing a plugin.

We can select a window (in the GIF below I am using a bash terminal as target) and send any text to that window. This will be helpful in my teaching sessions. Specially modifying larger Python functions etc.

I am yet to go through all the Advent of Neovim videos from TJ DeVries. I am hoping to improve (and more features) to the plugin after I learn about plugin development from the videos.

Talk Python to Me: #491: DuckDB and Python: Ducks and Snakes living together [Planet Python]

Join me for an insightful conversation with Alex Monahan, who works on documentation, tutorials, and training at DuckDB Labs. We explore why DuckDB is gaining momentum among Python and data enthusiasts, from its in-process database design to its blazingly fast, columnar architecture. We also dive into indexing strategies, concurrency considerations, and the fascinating way MotherDuck (the cloud companion to DuckDB) handles large-scale data seamlessly. Don’t miss this chance to learn how a single pip install could totally transform your Python data workflow!<br/> <br/> <strong>Episode sponsors</strong><br/> <br/> <a href='https://talkpython.fm/sentry'>Sentry Error Monitoring, Code TALKPYTHON</a><br> <a href='https://talkpython.fm/citizens'>Data Citizens Podcast</a><br> <a href='https://talkpython.fm/training'>Talk Python Courses</a><br/> <br/> <h2>Links from the show</h2> <div><strong>Alex on Mastodon</strong>: <a href="https://data-folks.masto.host/@__Alex__?featured_on=talkpython" target="_blank" >@__Alex__</a><br/> <br/> <strong>DuckDB</strong>: <a href="https://duckdb.org/?featured_on=talkpython" target="_blank" >duckdb.org</a><br/> <strong>MotherDuck</strong>: <a href="https://motherduck.com/?featured_on=talkpython" target="_blank" >motherduck.com</a><br/> <strong>SQLite</strong>: <a href="https://sqlite.org/?featured_on=talkpython" target="_blank" >sqlite.org</a><br/> <strong>Moka-Py</strong>: <a href="https://github.com/deliro/moka-py?featured_on=talkpython" target="_blank" >github.com</a><br/> <strong>PostgreSQL</strong>: <a href="https://www.postgresql.org/?featured_on=talkpython" target="_blank" >www.postgresql.org</a><br/> <strong>MySQL</strong>: <a href="https://www.mysql.com/?featured_on=talkpython" target="_blank" >www.mysql.com</a><br/> <strong>Redis</strong>: <a href="https://redis.io/?featured_on=talkpython" target="_blank" >redis.io</a><br/> <strong>Apache Parquet</strong>: <a href="https://parquet.apache.org/?featured_on=talkpython" target="_blank" >parquet.apache.org</a><br/> <strong>Apache Arrow</strong>: <a href="https://arrow.apache.org/?featured_on=talkpython" target="_blank" >arrow.apache.org</a><br/> <strong>Pandas</strong>: <a href="https://pandas.pydata.org/?featured_on=talkpython" target="_blank" >pandas.pydata.org</a><br/> <strong>Polars</strong>: <a href="https://pola.rs/?featured_on=talkpython" target="_blank" >pola.rs</a><br/> <strong>Pyodide</strong>: <a href="https://pyodide.org/?featured_on=talkpython" target="_blank" >pyodide.org</a><br/> <strong>DB-API (PEP 249)</strong>: <a href="https://peps.python.org/pep-0249/?featured_on=talkpython" target="_blank" >peps.python.org/pep-0249</a><br/> <strong>Flask</strong>: <a href="https://flask.palletsprojects.com/?featured_on=talkpython" target="_blank" >flask.palletsprojects.com</a><br/> <strong>Gunicorn</strong>: <a href="https://gunicorn.org/?featured_on=talkpython" target="_blank" >gunicorn.org</a><br/> <strong>MinIO</strong>: <a href="https://min.io/?featured_on=talkpython" target="_blank" >min.io</a><br/> <strong>Amazon S3</strong>: <a href="https://aws.amazon.com/s3/?featured_on=talkpython" target="_blank" >aws.amazon.com/s3</a><br/> <strong>Azure Blob Storage</strong>: <a href="https://azure.microsoft.com/products/storage/?featured_on=talkpython" target="_blank" >azure.microsoft.com/products/storage</a><br/> <strong>Google Cloud Storage</strong>: <a href="https://cloud.google.com/storage?featured_on=talkpython" target="_blank" >cloud.google.com/storage</a><br/> <strong>DigitalOcean</strong>: <a href="https://www.digitalocean.com/?featured_on=talkpython" target="_blank" >www.digitalocean.com</a><br/> <strong>Linode</strong>: <a href="https://www.linode.com/?featured_on=talkpython" target="_blank" >www.linode.com</a><br/> <strong>Hetzner</strong>: <a href="https://www.hetzner.com/?featured_on=talkpython" target="_blank" >www.hetzner.com</a><br/> <strong>BigQuery</strong>: <a href="https://cloud.google.com/bigquery?featured_on=talkpython" target="_blank" >cloud.google.com/bigquery</a><br/> <strong>DBT (Data Build Tool)</strong>: <a href="https://docs.getdbt.com/?featured_on=talkpython" target="_blank" >docs.getdbt.com</a><br/> <strong>Mode</strong>: <a href="https://mode.com/?featured_on=talkpython" target="_blank" >mode.com</a><br/> <strong>Hex</strong>: <a href="https://hex.tech/?featured_on=talkpython" target="_blank" >hex.tech</a><br/> <strong>Python</strong>: <a href="https://www.python.org/?featured_on=talkpython" target="_blank" >www.python.org</a><br/> <strong>Node.js</strong>: <a href="https://nodejs.org/?featured_on=talkpython" target="_blank" >nodejs.org</a><br/> <strong>Rust</strong>: <a href="https://www.rust-lang.org/?featured_on=talkpython" target="_blank" >www.rust-lang.org</a><br/> <strong>Go</strong>: <a href="https://go.dev/?featured_on=talkpython" target="_blank" >go.dev</a><br/> <strong>.NET</strong>: <a href="https://dotnet.microsoft.com/?featured_on=talkpython" target="_blank" >dotnet.microsoft.com</a><br/> <strong>Watch this episode on YouTube</strong>: <a href="https://www.youtube.com/watch?v=3wGeadcKens" target="_blank" >youtube.com</a><br/> <strong>Episode transcripts</strong>: <a href="https://talkpython.fm/episodes/transcript/491/duckdb-and-python-ducks-and-snakes-living-together" target="_blank" >talkpython.fm</a><br/> <br/> <strong>--- Stay in touch with us ---</strong><br/> <strong>Subscribe to Talk Python on YouTube</strong>: <a href="https://talkpython.fm/youtube" target="_blank" >youtube.com</a><br/> <strong>Talk Python on Bluesky</strong>: <a href="https://bsky.app/profile/talkpython.fm" target="_blank" >@talkpython.fm at bsky.app</a><br/> <strong>Talk Python on Mastodon</strong>: <a href="https://fosstodon.org/web/@talkpython" target="_blank" ><i class="fa-brands fa-mastodon"></i>talkpython</a><br/> <strong>Michael on Bluesky</strong>: <a href="https://bsky.app/profile/mkennedy.codes?featured_on=talkpython" target="_blank" >@mkennedy.codes at bsky.app</a><br/> <strong>Michael on Mastodon</strong>: <a href="https://fosstodon.org/web/@mkennedy" target="_blank" ><i class="fa-brands fa-mastodon"></i>mkennedy</a><br/></div>

Matt Layman: Optimizing SQLite - Building SaaS #210 [Planet Python]

In this episode, when worked on the newly migrated JourneyInbox site and focused on the database. Since me moved from Postgres to SQLite, I needed to make sure that SQLite was ready for users. We examined common configuration to optimize the database and applied that config to JourneyInbox.

27-12-2024

17:47

2024 Recap: Linux & FOSS Ecosystem’s Highlights [Linux Today]

Discover the biggest Linux updates, top FOSS breakthroughs, and community-driven innovations shaping the open-source landscape in 2024.

The post 2024 Recap: Linux & FOSS Ecosystem’s Highlights appeared first on Linux Today.

How to Use awk to Perform Arithmetic Operations in Loops [Linux Today]

awk is a scripting language designed for text processing and data extraction, which reads input line by line, splits each line into fields, and allows you to perform operations on those fields. It’s commonly used for tasks like pattern matching, arithmetic calculations, and generating formatted reports.

The post How to Use awk to Perform Arithmetic Operations in Loops appeared first on Linux Today.

Armin Ronacher: Reflecting on Life [Planet Python]

Last year I decided that I want to share my most important learnings about engineering, teams and quite frankly personal mental health. My hope is that those who want to learn from me find it useful. This is a continuation to this.

Over the years, I've been asked countless times: “What advice would you give to young programmers or engineers?” For the longest time, I struggled to answer. I wasn't sure I had anything definitive or profound to offer. And truthfully, even now, I'm not convinced I have enough answers. But as I've reflected on my journey to here, I've formulated some ideas that I believe are worth sharing — if only to provide a bit of guidance to those just starting out. For better or worse, I think those things are applicable regardless of profession.

My core belief is that fulfillment and happiness comes from deliberate commitment to meaningful work, relationships, and personal growth and purpose. I don't claim that these things can be replicated, but they worked for me and some others, so maybe they can be of use for you.

Put Time In

Putting time into work and skills — and by that truly investing oneself — is always worth it.

Whether it's working on a project, solving a difficult problem, or even refining soft skills like communication, the act of showing up and putting in the hours is essential. Practice makes perfect, but more so it's all about progress rather than perfection. Each hour you spend iterating, refining, failing and retrying brings you closer to excellence. It doesn't always feel that way in the moment but when you look back at what you did before, you will see your progress. And that act of looking back, and seeing how you improved, is immensely rewarding and in turn makes you enjoy your work.

I did not start out enjoying programming, not at all. I had a friend in school who was just better than me at everything. It felt demotivating. Programming turned out to be a necessary tool that I had to build things and to share with others, and through that, I eventually ended up enjoying it.

There is a narrative that working hard is inherently bad for your health or that long hours lead to burnout. I disagree. It's not about how many hours you put in, but about the enjoyment and quality of the work you're doing. Still some of my most favorite memories were some all-nighters I did when I was younger working on something. It wasn't even necessarily on projects that ended up meaningful or successful, but it was the act in itself. When you find joy in what you're building in the moment, work does not feel like a burden. Instead it feels exciting and exhilarating. These memories, that some might describe as unhealthy are some of my most pleasant ones.

Work And The Man

The key isn't avoiding hard work but finding meaning in it. Practice and effort, when coupled with a sense of purpose, not only make you better at what you do but also make the journey itself fulfilling. There is one catch however, and that is that your payout should not just be your happiness in the moment, but it should be long lasting.

The best way to completely destroy your long term satisfaction is if the effort you are putting into something is not reciprocated or the nature of the work feels meaningless. It's an obvious privilege to recommend that one shall not work for exploitative employers but you owe yourself to get this right. With time you build trust in yourself, and the best way to put this trust to use, is to break out of exploitative relationships.

If you end up doing things you do not believe in, it will get to you. It will not just demotivate you and make you unhappy at work, it will eventually make every hour you spent miserable and eventually get to your health.

Other than sleeping, work is what you spent the most time with for a significant portion of your life. If that is not fulfilling a core pillar of what can provide happiness is not supporting you. I have seen people advocate for just not caring to fix the work aspect, instead to work less and spend more free time. I have not found that to work for me. Work needs to be fulfilling, even if work is just a few hours a day.

Dare To Commit

Life isn't about sampling everything; it’s about making deliberate choices and committing to the ones that matter. You don't need to date twenty people to find the right partner, nor do you need a network of hundred acquaintances to succeed. Similarly, you don't need to work at ten different companies to build a meaningful career. Those things can be hugely beneficial, don't get me wrong, but you can do more with less too. When you focus on taking one step at a time, choosing the best option available to you in that moment you can accomplish great things. Feel free to look to others for inspiration, but do not to compare what they have versus what you don't. Nothing good will come from that. Everyone's journey is unique, shaped by the opportunities they encounter and the paths they decide to follow. Value grows not with the breadth of options explored but with the depth of commitment to the path you've chosen.

Just as mastering a skill pays dividends, so does committing on your personal or professional journey. Even if the world around you shifts — like the rise of AI in software engineering — your experience and expertise aren't wasted. Your gained experience makes it much easier for you to adjust course and it will give you the necessary trust in yourself. It allows to leverage what you've learned in new ways. While it's true that choosing from limited options might not always lead to the “best” possible outcome, the time and effort you invest in your chosen path can often outweigh the hypothetical gains of a different choice. In many cases, mastery and fulfillment come not from chasing endless possibilities but from fully embracing the one path you're on and making it your own.

Date to Marry

To me this happened through a lucky accident but it's something I strongly believe in. I'm an agnostic, I don't hold strong religious beliefs but I do believe in the purpose of and benefits of a lasting marriage. When my wife and I met I did not think I was in a position in my life where I had interest, desire or necessity in a deep relationship, let alone to marry. We did not live in the same country when we met and we had a long distance relationship for almost a year. That kind of relationship (particularly when visa issues are involved) has one incredible benefit: you really have to commit to your relationship. It's expensive and you spend a lot of time talking and sharing intimate thoughts. It also forces you to make a concious decision if the two of you believe it's worth continuing. You don't have the option to just “test drive” it. It forces you to figure out all the hard things upfront. Career, values, ambitions, children, the whole thing. That's a very different experience to swiping right and see what comes from it.

That one year of intensive dating changed me. I started to recognize the benefits of committing to something on a much deeper level. It taught me that vulnerability and opening yourself up can be a beautiful thing. It showed me that there was a whole part to myself I did not look into. It showed me that really committing to something, opens up a whole new world of opportunity and it allowed us to really invest into our relationship.

When you commit to your partner fully you get a lot in the process. Yes, there are risks and while you're dating, you need to figure these things out. You need to know on a fundamental level that the person you're dating is going to be the one you want to be with for a lifetime. That's not easy, because no human is perfect. Yet if that is the goal, you can poke at the parts where dragons can be. Only in situations of stress and challenge will you truly find out how the other person works and if that works for you.

I have heard people talk about “going to IKEA” for a date. I think that's a brilliant idea. Imagining a life together and struggling a bit through conflict and resolution is exactly the right way to go about it.

Having Children

Very few things have so profoundly changed me as our first child.

Seeing children grow up is such a moving experience. I enjoy being with them in moments of achievements or sadness alike and I love when they surprise me in the morning with their newfound wisdom or after school with their proud achievements. It's fun to play with them, to help them learn new things and you can do things together you haven't done since your own childhood.

I'm lucky to have kids. I grew up in a society that has largely painted a pretty dark picture about having children but I do not share those views. We knew we wanted children and I'm glad we didn't wait. You can't cheat nature on this thing and at the present state of scientific development, things still are much harder if you try to have children late.

Nothing will ever be perfect. There were sleepless nights, there are the sicknesses that come in autumn with daycare and school. You need to arrange things in different ways than you were used to. You will hear a lot from parents and educators about what is is like to have children but the reality however is that I don't think it's possible to know how it is to have kids until you do. In a way you have to jump into the cold water and there is no going back.

There are some important prerequisites though, but I think differently about them now then I did before. I don't think that you need a lot of money or a stable career, but you need to have your marriage and house in order. The most important thing I learned about having children is that you first and foremost need to take care of yourself. Any stress you experience, you will pass on to your children and it will harm them in the process. This is really important. There are lots of dysfunctional households and bad parents and you should not have children if you can't take care of yourself.

Those are the important parts, but then there are superficial issues. I almost entirely opted out of reading parental advise books because I could feel how they stress me out. I found it easier to take on challenges as they arrive naturally. If you have a loving relationship with your spouse you can extend that to your children and learn how to deal with challenges calmly (or as calmly as you can). You need to be there for each other. Your children will not become more successful because you mastered breast feeding on day one or if you taught them sign language before they can talk. They will however be significantly better off if you can jump on a moment's notice to take care of your spouse or child when something goes wrong.

Our experience is unlikely to be your experience, but there are some things that are shared among parents. You grow above yourself when all the sudden become fully responsible for another human being and you can't opt out of it. It also invites you to reflect on yourself more and how you came to be the person that you are. I also don't think it makes you any less ambitious, but it changes how you define success for yourself. Your horizon opens up and it really makes you think more about the totality of your family rather than yourself.

My life isn't about perfection or constantly chasing what's next; it's about being present and committing to the things that matter. This is also what I'm passing on to my children. Whatever your journey may look like, I hope you find joy, purpose, and the courage to commit fully to it and that you found something useful in my writings.

25-12-2024

11:27

LibreOffice 24.8.1, the first minor release of the recently announced LibreOffice 24.8 family, is available for download [Press Releases Archives - The Document Foundation Blog]

The LibreOffice 24.8 family is optimised for the privacy-conscious office suite user who wants full control over the information they share

Berlin, 12 September 2024 – LibreOffice 24.8.1, the first minor release of the LibreOffice 24.8 family of the free, volunteer-supported office suite for Windows (Intel, AMD and ARM), macOS (Apple and Intel) and Linux, is available at www.libreoffice.org/download. For users who don’t need the latest features and prefer a more tested version, TDF maintains the previous LibreOffice 24.2 family, with several months of back-ported fixes. The current version is LibreOffice 24.2.6.

LibreOffice is the only software for creating documents that contain personal or confidential information that respects the privacy of the user – ensuring that the user is able to decide if and with whom to share the content they create. As such, LibreOffice is the best option for the privacy-conscious office suite user, and offers a feature set comparable to the leading product on the market.

In addition, LibreOffice offers a range of interface options to suit different user habits, from traditional to modern, and makes the most of different screen sizes by optimising the space available on the desktop to put the maximum number of features just a click or two away.

The biggest advantage over competing products is the LibreOffice Technology Engine, the single software platform on which desktop, mobile and cloud versions of LibreOffice – including those from ecosystem companies – are based. This allows LibreOffice to provide a better user experience and to produce identical and fully interoperable documents based on the two available ISO standards: the Open Document Format (ODT, ODS and ODP) and the proprietary Microsoft OOXML (DOCX, XLSX and PPTX). The latter hides a great deal of artificial complexity, which can cause problems for users who are confident that they are using a true open standard.

End users looking for support will be helped by the immediate availability of the LibreOffice 24.8 Getting Started Guide, which can be downloaded from the following link: books.libreoffice.org. In addition, they will be able to get first-level technical support from volunteers on the user mailing lists and the Ask LibreOffice website: ask.libreoffice.org.

A short video highlighting the main new features is available on YouTube and PeerTube peertube.opencloud.lu/w/ibmZUeRgnx9bPXQeYUyXTV.

Please confirm that you want to play a YouTube video. By accepting, you will be accessing content from YouTube, a service provided by an external third party.

If you accept this notice, your choice will be saved and the page will refresh.

LibreOffice for Enterprise

For enterprise-class deployments, TDF strongly recommends the LibreOffice Enterprise family of applications from ecosystem partners – for desktop, mobile and cloud – with a wide range of dedicated value-added features and other benefits such as SLAs: www.libreoffice.org/download/libreoffice-in-business/.

Every line of code developed by ecosystem companies for enterprise customers is shared with the community on the master code repository and improves the LibreOffice technology platform. Products based on LibreOffice Technology are available for all major desktop operating systems (Windows, macOS, Linux and ChromeOS), mobile platforms (Android and iOS) and the cloud.

The Document Foundation has developed a migration protocol to help companies move from proprietary office suites to LibreOffice, based on the provision of an LTS (long-term support) enterprise-optimised version of LibreOffice, plus migration consulting and training provided by certified professionals who offer value-added solutions that are consistent with proprietary offerings. Reference: www.libreoffice.org/get-help/professional-support/.

In fact, LibreOffice’s mature code base, rich feature set, strong support for open standards, excellent compatibility and LTS options from certified partners make it the ideal solution for organisations looking to regain control of their data and break free from vendor lock-in.

LibreOffice 24.8.1 availability

LibreOffice 24.8.1 is available from www.libreoffice.org/download/. Minimum requirements for proprietary operating systems are Microsoft Windows 7 SP1 (no longer supported by Microsoft) and Apple macOS 10.15. Products based on LibreOffice technology for Android and iOS are listed at www.libreoffice.org/download/android-and-ios/.

LibreOffice users, free software advocates and community members can support The Document Foundation by making a donation at www.libreoffice.org/donate.

Ubuntu Users Get Easier Access to Cutting-Edge Intel Drivers [OMG! Ubuntu!]

Canonical and Intel have announced they’re making it easier for Ubuntu users to get cutting-edge drivers for Intel’s newest discrete GPUs. The effort brings “ray tracing and improved machine learning performance” for Intel Arc B580 and B570 “Battlemage” discrete GPUs to users on Ubuntu 24.10, building on that releases’ preexisting support for Intel Core Ultra Xe2 iGPUs. “For the past decade, Ubuntu has been one of the first distributions to enable the latest Intel architectures. Building upon this strong collaboration, Intel and Canonical are excited to announce the availability of an Ubuntu graphics preview for [24.10]”, they say. Users with […]

Canonical and Intel have announced they’re making it easier for Ubuntu users to get cutting-edge drivers for Intel’s newest discrete GPUs. The effort brings “ray tracing and improved machine learning performance” for Intel Arc B580 and B570 “Battlemage” discrete GPUs to users on Ubuntu 24.10, building on that releases’ preexisting support for Intel Core Ultra Xe2 iGPUs. “For the past decade, Ubuntu has been one of the first distributions to enable the latest Intel architectures. Building upon this strong collaboration, Intel and Canonical are excited to announce the availability of an Ubuntu graphics preview for [24.10]”, they say. Users with […]

You're reading Ubuntu Users Get Easier Access to Cutting-Edge Intel Drivers, a blog post from OMG! Ubuntu. Do not reproduce elsewhere without permission.

Daniel Roy Greenfeld: TIL: types.SimpleNamespace is a Bunch class [Planet Python]

Did you know that Python's types library has a bunch class implementation? How did I not see this before?!

PyCoder’s Weekly: Issue #661 (Dec. 24, 2024) [Planet Python]

#661 – DECEMBER 24, 2024

View in Browser »

Exploring Modern Sentiment Analysis Approaches in Python

What are the current approaches for analyzing emotions within a piece of text? Which tools and Python packages should you use for sentiment analysis? This week, Jodie Burchell, developer advocate for data science at JetBrains, returns to the show to discuss modern sentiment analysis in Python.

REAL PYTHON podcast

Topological Sort

A Directed Acyclic Graph (DAG) is a common data structure used to contain a series of related items that must have certain order or dependency. Topological sorting is used to help find where you might start processing to get in order handling of the items in a DAG.

REDOWAN DELOWAR

Essential Python Web Security

This series explores the critical security principles every Python web developer needs. The first post delves into fundamental security best practices, ranging from general principles to specific Python-related techniques.

MICHAEL FORD

Quiz: How to Remove Items From Lists in Python

In this quiz, you’ll test your understanding of removing items from lists in Python. This is a fundamental skill in Python programming, and mastering it will enable you to manipulate lists effectively.

REAL PYTHON

Articles & Tutorials

Programming Sockets in Python

In this in-depth video course, you’ll learn how to build a socket server and client with Python. By the end, you’ll understand how to use the main functions and methods in Python’s socket module to write your own networked client-server applications.

REAL PYTHON course

Python Decorators: A Super Useful Feature

Python decorators are one of Hashim’s favorite features. This post covers some examples he’s used in his projects. It includes the Prometheus Histogram Timing Decorators and OpenTelemetry (OTel) Manual Span Decorators.

HASHIM COLOMBOWALA

A Practical Example of the Pipeline Pattern in Python

“The pipeline design pattern (also known as Chain of Command pattern) is a flexible way to handle a sequence of actions, where each handler in the chain processes the input and passes it to the next handler.”

JUAN JOSÉ EXPÓSITO GONZÁLEZ

Best Shift-Left Testing Tools to Improve Your QA

The later in your development process that you discover the bug the more expensive it is. Shift-Left Testing is a collection of techniques to attempt to move bug discovery earlier in your process.

ANTONELLO ZANINI

Merging Dictionaries in Python

There are multiple ways of merging two or more dictionaries in Python. This post teaches you how to do it and how to deal with corner cases like duplicate keys.

TREY HUNNER

Django Quiz 2024

Adam runs a quiz on Django at his Django London meetup. He’s shared it so you can try it yourself. Test how much you know about your favorite web framework.

ADAM JOHNSON

Top Python Web Development Frameworks in 2025

This post compares many of the different web frameworks available for Python. It covers: Reflex, Django, Flask, Gradio, Streamlit, Dash, and FastAPI.

TOM GOTSMAN

My SQLAlchemy Cookbook

The post contains an embedded JupyterLite notebook containing a cookbook for SQLAlchemy. It focuses on the patterns you use in everyday ORM coding.

JAMIE CHANG

Django: Launch pdb When a Given SQL Query Runs

Here’s a technique for using pdb within Django through hooking specific SQL queries. This uses database instrumentation in the Django ORM.

ADAM JOHNSON

Projects & Code

Events

SPb Python Drinkup

December 26, 2024

MEETUP.COM

PyDelhi User Group Meetup

December 28, 2024

MEETUP.COM

PythOnRio Meetup

December 28, 2024

PYTHON.ORG.BR

Python Sheffield

December 31, 2024

GOOGLE.COM

STL Python

January 2, 2025

MEETUP.COM

Happy Pythoning!

This was PyCoder’s Weekly Issue #661.

View in Browser »

[ Subscribe to 🐍 PyCoder’s Weekly 💌 – Get the best Python news, articles, and tutorials delivered to your inbox once a week >> Click here to learn more ]

Django Weblog: Welcome to our new Django accessibility team members - Eli, Marijke, Saptak, Tushar [Planet Python]

Sarah Abderemane, Thibaud Colas and Tom Carrick are pleased to introduce four new members in the Django Accessibility team ❤️.

Marijke (pronounced Mah-Rye-Kuh) is a freelance web developer who creates human-friendly applications. She is based in Groningen, The Netherlands, specializing in Django, Python, HTML, SCSS, and vanilla JavaScript. She helps companies expand their existing projects, think about new features, train new developers, and improve developer team workflows. She is aDdjango contributor from the first session of Djangonaut Space program and she loves tea. You can learn more about Marijke on her website.

Eli is a full-stack developer from Uruguay who loves using Django and React. She is a Django contributor from the first session of the Djangonaut Space program

. She is passionate about good quality code, unit testing, and web accessibility. She enjoys drinking Maté (and talking about it!) and watching her football team play.Tushar is a software engineer at Canonical, based in India. He got involved on open source during his studies loving the the supportive community. Through fellowships like Major League Hacking, Tushar dove into Django and took part in Djangonaut Space. Learn more about Tushar on his personal website.

Saptak is a self-proclaimed Human Rights Centered Developer Web. He focuses on security, privacy, accessibility, localization, and other human rights associated with websites that makes websites more inclusive and usable by everyone. Learn more about Saptak on his personal website.

Listen to them talking about their work¶

Here are recent talks or podcasts from our new team members if you want to get to know them better.

Marijke Luttekes - My path to becoming a Django contributor | pyGrunn 2024

Marijke Luttekes - My path to becoming a Django contributor | pyGrunn 2024

Eli Rosselli - My step-by-step guide to becoming a Django core contributor | DjangoCon Europe 2024

Eli Rosselli - My step-by-step guide to becoming a Django core contributor | DjangoCon Europe 2024

Tushar Gupta - Djangonauts, Ready for Blast-Off | Talk Python to Me Ep.451

Tushar Gupta - Djangonauts, Ready for Blast-Off | Talk Python to Me Ep.451

Saptak S - Accessibility for the Django Community | DjangoCon Europe 2024

Saptak S - Accessibility for the Django Community | DjangoCon Europe 2024

What’s next¶

In truth, our four new accessibility team members joined the team months ago – shortly after we published our 2023 accessibility team report. Up next, a lot of the team will be present at FOSDEM 2025, organizing, volunteering, or speaking at the Inclusive Web Devroom.

Talk Python to Me: #490: Django Ninja [Planet Python]

If you're a Django developer, I'm sure you've heard so many people raving about FastAPI and Pydantic. But you really love Django and don't want to switch. Then you might want to give Django Ninja a serious look. Django Ninja is highly inspired by FastAPI, but is also deeply integrated into Django itself. We have Vitaliy Kucheryaviy the creator of Django Ninja on this show to tell us all about it.<br/> <br/> <strong>Episode sponsors</strong><br/> <br/> <a href='https://talkpython.fm/sentry'>Sentry Error Monitoring, Code TALKPYTHON</a><br> <a href='https://talkpython.fm/bluehost'>Bluehost</a><br> <a href='https://talkpython.fm/training'>Talk Python Courses</a><br/> <br/> <h2>Links from the show</h2> <div><strong>Vitaly</strong>: <a href="https://github.com/vitalik?featured_on=talkpython" target="_blank" >github.com/vitalik</a><br/> <strong>Vitaly on X</strong>: <a href="https://x.com/vital1k?featured_on=talkpython" target="_blank" >@vital1k</a><br/> <br/> <strong>Top 5 Episodes of 2024</strong>: <a href="https://talkpython.fm/blog/posts/top-talk-python-podcast-episodes-of-2024/" target="_blank" >talkpython.fm/blog/posts/top-talk-python-podcast-episodes-of-2024</a><br/> <br/> <strong>Django Ninja</strong>: <a href="https://django-ninja.dev/?featured_on=talkpython" target="_blank" >django-ninja.dev</a><br/> <strong>Motivation section we talked through</strong>: <a href="https://django-ninja.dev/motivation/?featured_on=talkpython" target="_blank" >django-ninja.dev/motivation</a><br/> <strong>LLM for Django Ninja</strong>: <a href="https://llm.django-ninja.dev/?featured_on=talkpython" target="_blank" >llm.django-ninja.dev</a><br/> <strong>Nano Django</strong>: <a href="https://github.com/vitalik/nano-django?featured_on=talkpython" target="_blank" >github.com/vitalik/nano-django</a><br/> <strong>Episode transcripts</strong>: <a href="https://talkpython.fm/episodes/transcript/490/django-ninja" target="_blank" >talkpython.fm</a><br/> <br/> <strong>--- Stay in touch with us ---</strong><br/> <strong>Subscribe to Talk Python on YouTube</strong>: <a href="https://talkpython.fm/youtube" target="_blank" >youtube.com</a><br/> <strong>Talk Python on Bluesky</strong>: <a href="https://bsky.app/profile/talkpython.fm" target="_blank" >@talkpython.fm at bsky.app</a><br/> <strong>Talk Python on Mastodon</strong>: <a href="https://fosstodon.org/web/@talkpython" target="_blank" ><i class="fa-brands fa-mastodon"></i>talkpython</a><br/> <strong>Michael on Bluesky</strong>: <a href="https://bsky.app/profile/mkennedy.codes?featured_on=talkpython" target="_blank" >@mkennedy.codes at bsky.app</a><br/> <strong>Michael on Mastodon</strong>: <a href="https://fosstodon.org/web/@mkennedy" target="_blank" ><i class="fa-brands fa-mastodon"></i>mkennedy</a><br/></div>

24-12-2024

12:23

Kdenlive Update Adds New Subtitle Tools, Effects + More [OMG! Ubuntu!]

A sizeable update to the free, open-source video editor Kdenlive is now available to download. Kdenlive 24.12 arrives stuffed like a seasonal bird with bug fixes, performance tweaks, and usability enhancements. Additionally, the editor’s developers have removed support for Qt5 so that, as of this release, it is entirely Qt6. Subtitling gets a big boost with the arrival of Advanced SubStation Alpha (ASS) subtitle support. The key benefit of these subtitles (I’ll swerve calling them ASS) is greater customisation, including things like text strokes, drop shadows, margins, and even effects like masking. A new Subtitle Manager makes editing, ordering, and […]

A sizeable update to the free, open-source video editor Kdenlive is now available to download. Kdenlive 24.12 arrives stuffed like a seasonal bird with bug fixes, performance tweaks, and usability enhancements. Additionally, the editor’s developers have removed support for Qt5 so that, as of this release, it is entirely Qt6. Subtitling gets a big boost with the arrival of Advanced SubStation Alpha (ASS) subtitle support. The key benefit of these subtitles (I’ll swerve calling them ASS) is greater customisation, including things like text strokes, drop shadows, margins, and even effects like masking. A new Subtitle Manager makes editing, ordering, and […]

You're reading Kdenlive Update Adds New Subtitle Tools, Effects + More, a blog post from OMG! Ubuntu. Do not reproduce elsewhere without permission.

Daniel Roy Greenfeld: TIL: SequentialTaskSet for Locust [Planet Python]

SequentialTaskSet makes it so Locust tasks happen in a particular order, which ensures your simulated users are clicking around in a more human manner at a more human pace. Attribution goes to Audrey Roy Greenfeld.

You can see it in action in the now updated previous entry on the topic of Locust for load testing.

Daniel Roy Greenfeld: TIL: Making pytest use Ipython's PDB [Planet Python]

alias pdb='pytest --pdb --pdbcls=IPython.terminal.debugger:TerminalPdb'

Usage:

pdb tests/test_things::test_broken_thing

Daniel Roy Greenfeld: TIL: Fractional Indexing [Planet Python]

In the past when I've done this for web pages and various other interfaces it has been a mess. I've built ungainly sort order in numeric or alphanumeric batches. Inevitably there is a conflict, often sooner rather than later. So sorting a list of things often means updating all the elements to preserve the order in the datastore. I've learned to mark each element with a big value, but it's ugly and ungainly

Fortunately for me, going forward, I now know about Fractional Indexing.

References:

- https://www.figma.com/blog/realtime-editing-of-ordered-sequences/

- https://observablehq.com/@dgreensp/implementing-fractional-indexing

- https://github.com/httpie/fractional-indexing-python

Daniel Roy Greenfeld: TIL: Python Dictonary Merge Operator [Planet Python]

The function way

Until today I did this:

# Make first dict

num_map = {

'one': '1', 'two': '2', 'three': '3', 'four': '4',

'five': '5', 'six': '6', 'seven': '7', 'eight': '8',

'nine': '9'

}

# Add second dict

num_map.update({str(x):str(x) for x in range(1,10)})

print(num_map)

The operator way

Now thanks to Audrey Roy Greenfeld now I know I can do this:

# Make first dict while adding second dict

num_map = {

'one': '1', 'two': '2', 'three': '3', 'four': '4',

'five': '5', 'six': '6', 'seven': '7', 'eight': '8',

'nine': '9'

} | {str(x):str(x) for x in range(1,10)}

print(num_map)

Daniel Roy Greenfeld: TIL: Python's defaultdict takes a factory function [Planet Python]

I've never really paid attention to this object but maybe I should have. It takes a single argument of a callable function. If you put in Python types it sets the default value to those types. For example, if I use an int at the instantiating argument then it gives us a zero.

>>> from collections import defaultdict

>>>

>>> mydict = defaultdict(int)

>>> print(mydict['anykey'])

0

Note that defaultdict also act like regular dictionaries, in that you can set keys. So mydict['me'] = 'danny' will work as you expect it to with a standard dictionary.

It gets more interesting if we pass in a more dynamic function. In the exmaple below we use random.randint and a lambda to make the default value be a random number between 1 and 100.

>>> from random import randint

>>>

>>> random_values = defaultdict(lambda: randint(1,100))

Let's try it out!

>>> for i in range(5):

>>> print(random_values[i])

>>> print(random_values)

29

90

56

42

70

defaultdict(<function <lambda> at 0x72d292bb6de0>, {0: 29, 1: 90, 2: 56, 3: 42, 4: 70})

Attribution goes to Laksman Prasad, who pointing this out and encouraging me to closer look at defaultdict.

Daniel Roy Greenfeld: TIL: How to reset Jupyter notebook passwords [Planet Python]

jupyter notebook password

Attribution for this goes to Johno Whitaker.

Daniel Roy Greenfeld: TIL: Arity [Planet Python]

I'm excited to have learned there's a word for the count of arguments to a function/method/class: arity. Throughout my career I would have called this any of the following:

number_of_argsparam_countnumargsintArgumentCount

Thanks to Simon Willison for using it in a library or two and making me look up the word.

Daniel Roy Greenfeld: TIL: Using hx-swap-oob with FastHTML [Planet Python]

Until now I didn't use this HTMX technique, but today Audrey Roy Greenfeld and I dove in together to figure it out. Note that we use language that may not match HTMX's description, sometimes it's better to put things into our own words so we understand it better.

from fasthtml.common import *

app,rt = fast_app()

def mk_row(name, email):

return Tbody(

# Only the Tr element and its children is being

# injected, the Tbody isn't being injected

Tr(Td(name), Td(email)),

# This tells HTMX to inject this row at the end of

# the #contacts-tbody DOM element

hx_swap_oob="beforeend:#contacts-tbody",

),

@rt

def index():

return Div(

H2("Contacts"),

Table(

Thead(Tr(Th("Name"), Th("Email"))),

Tbody(

Tr(Td("Audrey"), Td("mommy@example.com")),

Tr(Td("Uma"), Td("kid@example.com")),

Tr(Td("Daniel"), Td("daddy@example.com")),

# Identifies the contacts-tbody DOM element

id="contacts-tbody",

),

),

H2("Add a Contact"),

Form(

Label("Name", Input(name="name", type="text")),

Label("Email", Input(name="email", type="email")),

Button("Save"),

hx_post="/contacts",

# Don't swap out the contact form

hx_swap='none',

# Reset the form and put focus onto the name field

hx_on__after_request="this.reset();this.name.focus();"

)

)

@rt

def contacts(name:str,email:str):

print(f"Adding {name} and {email} to table")

return mk_row(name,email)

serve()

To verify the behavior, view the rendered elements in your browser of choice before, after, and during submitting the form.

Daniel Roy Greenfeld: TIL: Autoreload for Jupyter notebooks [Planet Python]

Add these commands to the top of a notebook within a Python cell. Thanks to Jeremy Howard for the tip.

%load_ext autoreload

%autoreload 2

Daniel Roy Greenfeld: TIL: run vs source [Planet Python]

Run

A run launches a child process in a new bash within bash, so variables last only the lifetime of the command. This is why launching Python environments doesn't use run.

./list-things.sh

Source

A source is the current bash, so variables last beyond the running of a script. This is why launching Python environments use source.

source ~/.venv/bin/activate

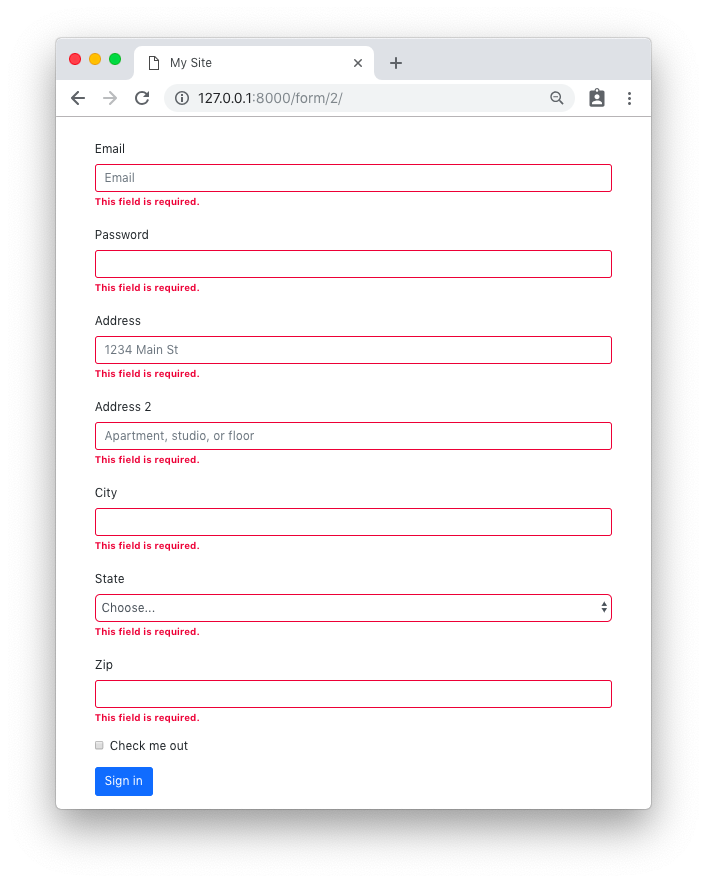

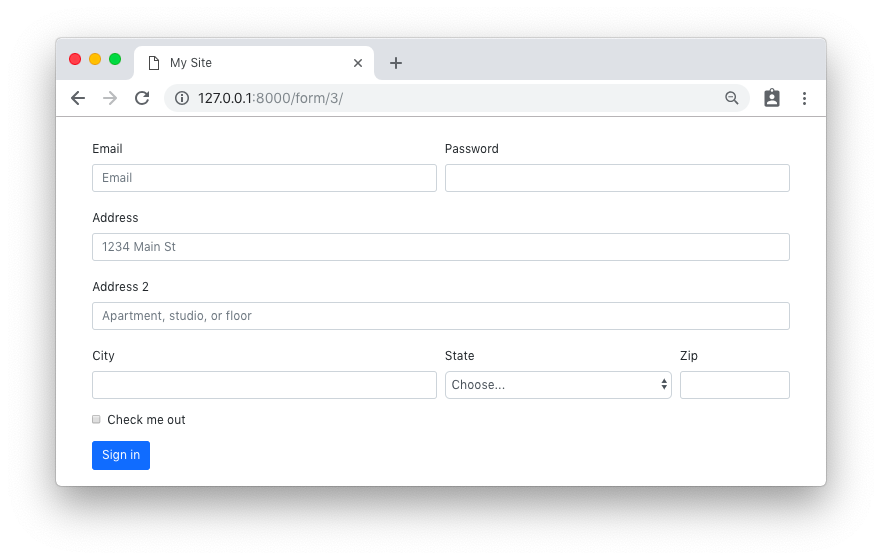

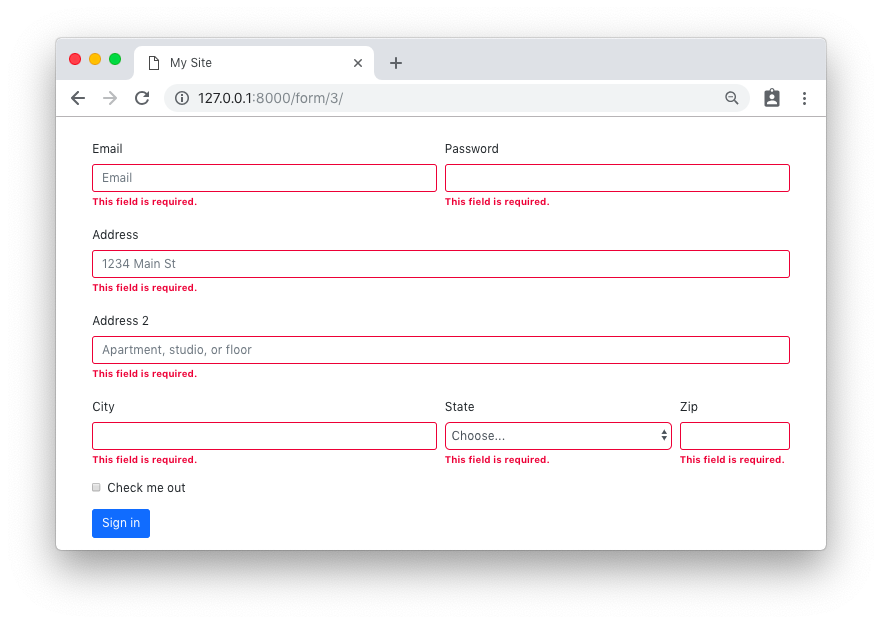

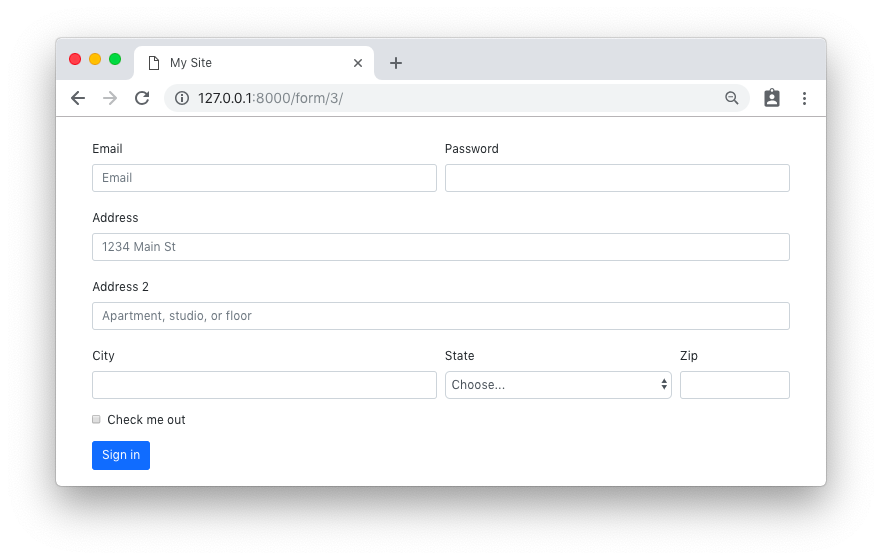

Daniel Roy Greenfeld: Using locust for load testing [Planet Python]

Locust is a Python library that makes it relatively straightforward to write Python tests. This heavily commented code example explains each section of code. To use locust:

- Install locust:

pip install locust - Copy the file below into the directory where you want to run locust

- In that directory, at the command-line, type:

locust - Open http://localhost:8089/

# locustfile.py

# For more options read the following

# - https://docs.locust.io/en/stable/writing-a-locustfile.html

# - https://docs.locust.io/en/stable/tasksets.html

# Import Locust basics

from locust import HttpUser, SequentialTaskSet, task, between

# Imports for generating content

from string import ascii_letters

from random import randint, shuffle

def namer():

"Create a random string of letters under 10 characters long"

ascii_list = list(ascii_letters)

shuffle(ascii_list)

return ''.join(ascii_list[:10])

class TaskSet(SequentialTaskSet):

"""

A class for organizing tasks, inheriting from

SequentialTaskSet means the tasks happen in order.

"""

def on_start(self):

# Methods with the on_start name will be called for each

# simulated user when they start. Useful for logins and

# other 'do before doing all other things'.

pass

def on_stop(self):

# Methods with the on_stop name will be called for each

# simulated user when they stop. Useful for logouts and

# possibly data cleanup.

pass

# TASKS!

# Methods marked with the `@task` decorator is an action

# taken by a user This example focuses on changes to a

# database, but provides a foundation for creating tests on

# a more read-focused site

@task

def index(self):

# User goes to the root of the project

self.client.get('/')

@task

def create(self):

# User posts a create form with the fields 'name'

# and 'age'

with self.client.post('/create', dict(name=namer(), age=randint(1,35))) as resp:

self.pk = resp.text

@task

def update(self):

# User posts an update form with the fields 'name'

# and 'age'"

form_data = dict(id=self.pk, name=namer(), age=randint(1,35))

self.client.post(f'/{self.pk}/update', form_data)

@task

def delete(self):

# Represents the user getting a random ID and then

# going to the delete page for it.

self.client.get(f'/{self.pk}/delete')

class CatsiteUser(HttpUser):

"""

This class represents simulated users interacting with

a website.

"""

# What tasks should be done

tasks = [TaskSet]

# how long between clicks a user should take

wait_time = between(2, 5)

# The default host of the target client. This can be changed

# at any time

host = 'http://localhost:5001/'

Sample test site

For reference, this is the test site used to create the above locustfile. I'll admit that the above test is incomplete, a lot more tasks could be added to hit web routes. To use it:

- Install FastHTML:

pip install python-fasthtml - Copy the file into the directory you want to run it

- In that directory, at the command-line, type:

python cats.py - Open http://localhost:5001/

# cats.py

from fasthtml.common import *

# Set up the database and table

db = database('cats.db')

class Cat: name:str; age:int; id:int

cats = db.create(Cat, pk='id', transform=True)

# Instantiate FastHTML app and route handler

app, rt = fast_app()

def mk_form(target: str):

return Form(

P(A('Home', href=index)),

Fieldset(

Input(name='name'),

Input(name='age', type='number'),

),

Input(type='submit', value='submit'),

method='post'

)

def cat_count():

query = """select count(id) from cat;"""

result = db.execute(query)

return result.fetchone()[0]

@rt

def index():

return Titled('Cats',

P(A('Create cat', href='/create'), NotStr(' '), A('Random ID', href=random)),

P(f'Number of cats: {cat_count()}'),

Ol(

*[Li(A(f'{d.name}:{d.age}', href=f'/{d.id}')) for d in cats()]

)

)

@rt

def random():

# Small datasets so we can get away with using the RANDOM() function here

query = """SELECT id FROM cat ORDER BY RANDOM() LIMIT 1;"""

result = db.execute(query)

return result.fetchone()[0]

@rt('/create')

def get():

return Titled('Create Cat',

mk_form('/create')

)

@rt('/create')

def post(cat: Cat):

while True:

try:

cat = cats.insert(Cat(name=cat.name, age=cat.age))

break

except Exception as e:

print(e)

raise

return cat.id

@rt('/{id}')

def cat(id: int):

cat = cats[id]

return Titled(cat.name,

P(cat.age),

P(A('update', href=f'/{id}/update')),

P(A('delete', href=f'/{id}/delete')),

)

@rt('/{id}/update')

def get(id: int):

cat = cats[id]

return Titled('Edit Cat',

fill_form(mk_form(f'/{cat.id}/update'), cat)

)

@rt('/{id}/update')

def post(cat: Cat, id: int):

if id not in cats:

return RedirectResponse(url=index)

cat.id = id

db.begin()

try:

cats.update(cat)

db.commit()

except:

db.rollback()

return RedirectResponse(url=f'/{cat.id}')

@rt('/{id}/delete')

def cat(id: int):

if id not in cats:

RedirectResponse(url=index)

# db.begin()

cats.delete(id)

# db.commit()

return RedirectResponse(url=index)

serve()

Updates

- 2024-11-08 Use

SequentialTaskSetas recommended by Audrey Roy Greenfeld - 2024-11-08 Fixed a few bugs in cats.py

Daniel Roy Greenfeld: TIL: Using Python to removing prefixes and suffixes [Planet Python]

Starting in Python 3.9, s.removeprefix() and s.removesuffix() were added as str built-ins. Which easily covers all the versions of Python I currently support.

Usage for removeprefix():

>>> 'Spam, Spam'.removeprefix('Spam')

', Spam'

>>> 'Spam, Spam'.removeprefix('This is not in the prefix')

'Spam, Spam'

Usage for removesuffix():

>>> 'Spam, Spam'.removesuffix('Spam')

'Spam, '

>>> 'Spam, Spam'.removesuffix('This is not in the suffix')

'Spam, Spam'

Juri Pakaste: New Swift Package: tui-fuzzy-finder [Planet Python]

Speaking of new Swift libraries, I released another one: tui-fuzzy-finder is a terminal UI library for Swift that provides an incremental search and selection UI that imitates the core functionality of fzf very closely.

I have a ton of scripts that wrap fzf. Some of them try to provide some kind of command line interface with options. Most of them work with pipes where I fetch data from somewhere, parse it with jq, feed it fzf, use the selection again as a part of a parameter for something else, etc. It's all great, except that I really don't love shell scripting.

With tui-fuzzy-finder I want to be able to write tools like that in a language I do actually enjoy a great deal. The package provides both a command line tool and a library, but the purpose of the command line tool is just to allow me to test the library, as writing automatic tests for terminal control is difficult. Competing with fzf in the general purpose CLI tool space is a non-goal.

I haven't implemented the preview features of fzf, nor key binding configuration. I'm not ruling either of those out, but I have not needed them yet and don't plan to work on them before a need arises.

Documentation at Swift Package Index.

23-12-2024

11:43

Mozilla Revenue Jumped in 2023, But Search Deal Cash Fell [OMG! Ubuntu!]

Mozilla’s overall revenue saw a sizeable boost in 2023, despite a drop in income from its lucrative search engine deals. According to its latest financial report, Mozilla’s revenue in 2023 hit ~$653 million (US), up from ~$593 million in 2022. The cause of the increase? Not any flashy new products, services, or deals – just ol’ fashioned interest and dividends (~$47 million) and returns on its investments (~$24 million). In fact, Mozilla’s income from search engine deals actually fell by ~$15 million in 2023. Revenue from ads, sponsored links, and its own product subscriptions (like Pocket) also dipped by ~$9 […]

Mozilla’s overall revenue saw a sizeable boost in 2023, despite a drop in income from its lucrative search engine deals. According to its latest financial report, Mozilla’s revenue in 2023 hit ~$653 million (US), up from ~$593 million in 2022. The cause of the increase? Not any flashy new products, services, or deals – just ol’ fashioned interest and dividends (~$47 million) and returns on its investments (~$24 million). In fact, Mozilla’s income from search engine deals actually fell by ~$15 million in 2023. Revenue from ads, sponsored links, and its own product subscriptions (like Pocket) also dipped by ~$9 […]

You're reading Mozilla Revenue Jumped in 2023, But Search Deal Cash Fell, a blog post from OMG! Ubuntu. Do not reproduce elsewhere without permission.

22-12-2024

10:17

T2 Linux SDE 24.12 'Sky's the Limit!' Released With 37 ISOs For 25 CPU ISAs [Slashdot: Linux]

Berlin-based T2 Linux developer René Rebe is also long-time Slashdot reader ReneR — and popped by with a special announcement for the holidays: The T2 Linux team has unveiled T2 Linux SDE 24.12, codenamed "Sky's the Limit!", delivering a massive update for this highly portable source-based Linux distribution... With 3,280 package updates, 206 new features, and the ability to boot on systems with as little as 512MB RAM, this release further strengthens T2 Linux's position as the ultimate tool for developers working across diverse hardware and embedded systems. Some highlights from Rene's announcement: "The release includes 37 pre-compiled ISOs with Glibc, Musl, and uClibc, supporting 25 CPU architectures like ARM(64), RISCV(64), Loongarch64, SPARC(64), and vintage retro computing platforms such as M68k, Alpha, and even initial Nintendo Wii U support added." " The Cosmic Desktop, a modern Rust-based environment, debuts alongside expanded application support for non-mainstream RISC architectures, now featuring LibreOffice, OpenJDK, and QEMU." And T2sde.org gives this glimpse of the future: "While initially created for the Linux kernel, T2 already has proof-of-concept support for building 'home-brew' pkg for Other OS, including: BSDs, macOS and Haiku. Work on alternative micro kernels, such as L4, Fuchsia, RedoxOS or integrating building 'AOSP' Android is being worked on as well."

Read more of this story at Slashdot.

21-12-2024

17:55

VMware Workstation Pro Update Brings Linux Fixes [OMG! Ubuntu!]

Broadcom has released updates for VMware Workstation Pro for Windows and Linux, the first to arrive since the software became entirely free to use. Earlier this year, Broadcom made VMware Workstation Pro and its Mac equivalent Fusion Pro free for personal usage, and later for commercial usage. Anyone can download and install VMware’s desktop virtualisation software to use for whatever they want. — Assuming they have the patience to wade through rerouting links, portals, checkboxes, and dense documentation sites to locate the actual download. A blog post from a VMware team member walks through the 11 step (!) process. As […]

Broadcom has released updates for VMware Workstation Pro for Windows and Linux, the first to arrive since the software became entirely free to use. Earlier this year, Broadcom made VMware Workstation Pro and its Mac equivalent Fusion Pro free for personal usage, and later for commercial usage. Anyone can download and install VMware’s desktop virtualisation software to use for whatever they want. — Assuming they have the patience to wade through rerouting links, portals, checkboxes, and dense documentation sites to locate the actual download. A blog post from a VMware team member walks through the 11 step (!) process. As […]

You're reading VMware Workstation Pro Update Brings Linux Fixes, a blog post from OMG! Ubuntu. Do not reproduce elsewhere without permission.

5 Compelling Reasons to Choose Linux Over Windows [Linux Journal - The Original Magazine of the Linux Community]

Introduction

In the world of operating systems, Windows has long held the lion’s share of the market. Its user-friendly interface and wide compatibility have made it the default choice for many. However, in recent years, Linux has steadily gained traction, challenging the status quo with its unique offerings. What was once considered the domain of tech enthusiasts and developers is now being embraced by businesses, governments, and everyday users alike. But why should you consider switching to Linux? Let’s dive into five compelling reasons to embrace Linux over Windows.

Reason 1: Cost-Effectiveness

One of the most striking advantages of Linux is its cost-effectiveness. Linux is free and open-source, meaning you can download, install, and use it without paying a single penny. This stands in stark contrast to Windows, which requires users to purchase a license. Additionally, enterprise versions of Windows often come with recurring fees, further inflating the cost.

Linux doesn’t just save money on the operating system itself. There are no hidden costs for updates or essential tools. For example, most Linux distributions come pre-installed with a wealth of software—from office suites to development tools—that would otherwise cost extra on Windows. Businesses, in particular, stand to save significant amounts by switching their systems to Linux, eliminating licensing fees and reducing the need for expensive proprietary software.

Reason 2: Security and Privacy

In today’s digital age, security and privacy are paramount. Linux has a stellar reputation in both areas. Its architecture is inherently secure, designed to protect against malware and unauthorized access. Unlike Windows, which is frequently targeted by hackers due to its widespread use, Linux is far less susceptible to viruses and malware. In the rare event of a security breach, the open-source community quickly patches vulnerabilities, often faster than proprietary software vendors.

Privacy is another key area where Linux shines. Unlike Windows, which has faced criticism for data collection practices, Linux respects user privacy. Most Linux distributions collect little to no data, and because the source code is open, users can audit it to ensure there are no hidden backdoors or invasive tracking mechanisms.

Reason 3: Customizability

Linux is synonymous with freedom and flexibility. Unlike Windows, where customization options are limited to surface-level changes like themes and wallpapers, Linux offers deep customization. From choosing the desktop environment to tweaking system-level configurations, Linux allows users to mold their systems to suit their exact needs.

20-12-2024

10:39

Ubuntu Adds Support for Unicode’s Newest Emoji [OMG! Ubuntu!]

A paint splatter, super-tired face, and a harp are among new emoji users of Ubuntu 22.04 and 24.04 LTS will be able to see and type after installing an update to the Noto Color Emoji font. Ubuntu, which has shipped the font by default since 2017, is preparing to release an updated version containing the 8 new emoji added as part of the Unicode 16.0 standard. Unicode 16.0 went live in September, introducing a total of 5,185 new characters, including 7 new emoji code points and 1 new emoji sequence to create the official flag of the Island of Sark. The new […]

A paint splatter, super-tired face, and a harp are among new emoji users of Ubuntu 22.04 and 24.04 LTS will be able to see and type after installing an update to the Noto Color Emoji font. Ubuntu, which has shipped the font by default since 2017, is preparing to release an updated version containing the 8 new emoji added as part of the Unicode 16.0 standard. Unicode 16.0 went live in September, introducing a total of 5,185 new characters, including 7 new emoji code points and 1 new emoji sequence to create the official flag of the Island of Sark. The new […]

You're reading Ubuntu Adds Support for Unicode’s Newest Emoji, a blog post from OMG! Ubuntu. Do not reproduce elsewhere without permission.

19-12-2024

21:05

LibreOffice 24.8.4, optimised for the privacy-conscious user, is available for download [Press Releases Archives - The Document Foundation Blog]

Berlin, 19 December 2024 – LibreOffice 24.8.4, the fourth minor release of the LibreOffice 24.8 family of the free open source, volunteer-supported office suite for Windows (Intel, AMD and ARM), MacOS (Apple and Intel) and Linux, is available at www.libreoffice.org/download.

The release includes over 55 bug and regression fixes over LibreOffice 24.8.3 [1] to improve the stability and robustness of the software, as well as interoperability with legacy and proprietary document formats.

LibreOffice is the only office suite that respects the privacy of the user, ensuring that the user is able to decide if and with whom to share the content they create. It even allows deleting user related info from documents. As such, LibreOffice is the best option for the privacy-conscious office suite user, while offering a feature set comparable to the leading product on the market.

Also, LibreOffice offers a range of interface options to suit different user habits, from traditional to modern, and makes the most of different screen sizes by using all the space available on the desktop to put the maximum number of features just a click or two away.

The biggest advantage over competing products is the LibreOffice Technology engine, the single software platform on which desktop, mobile and cloud versions of LibreOffice – including those from ecosystem companies – are based.

This allows LibreOffice to produce identical and fully interoperable documents based on two ISO standards: the open and neutral Open Document Format (ODT, ODS, ODP) and the closed and fully proprietary Microsoft OOXML (DOCX, XLSX, PPTX), which hides a large amount of artificial complexity, and can cause problems for users who are confident that they are using a true open standard.

End users looking for support can download the LibreOffice 24.8 Getting Started, Writer, Impress, Draw and Math guides from the following link: books.libreoffice.org/. In addition, they can get first-level technical support from volunteers on mailing lists and the Ask LibreOffice website: ask.libreoffice.org.

LibreOffice for Enterprise

For enterprise-class deployments, TDF strongly recommends the LibreOffice Enterprise family of applications from ecosystem partners, with three or five year backporting of security patches, other dedicated value-added features and Service Level Agreements: www.libreoffice.org/download/libreoffice-in-business/.

Every line of code developed by ecosystem companies for enterprise customers is shared with the community on the master code repository and improves the LibreOffice Technology platform. Products based on LibreOffice Technology are available for all major desktop operating systems (Windows, macOS, Linux and ChromeOS), mobile platforms (Android and iOS) and the cloud.

The Document Foundation’s migration protocol helps companies move from proprietary office suites to LibreOffice, by installing the LTS (long-term support) enterprise-optimised version of LibreOffice, plus consulting and training provided by certified professionals: www.libreoffice.org/get-help/professional-support/.

In fact, LibreOffice’s mature code base, rich feature set, strong support for open standards, excellent compatibility and LTS options make it the ideal solution for organisations looking to regain control of their data and break free from vendor lock-in.

LibreOffice 24.8.4 availability

LibreOffice 24.8.4 is available from www.libreoffice.org/download/. Minimum requirements for proprietary operating systems are Microsoft Windows 7 SP1 (no longer supported by Microsoft) and Apple MacOS 10.15. Products for Android and iOS are at www.libreoffice.org/download/android-and-ios/.

LibreOffice 24.8.4 is available from www.libreoffice.org/download/. Minimum requirements for proprietary operating systems are Microsoft Windows 7 SP1 (no longer supported by Microsoft) and Apple MacOS 10.15. Products for Android and iOS are at www.libreoffice.org/download/android-and-ios/.

Users of the LibreOffice 24.2 branch (the last update being 24.2.7), which has recently reached end-of-life, should consider upgrading to LibreOffice 24.8.4, as this is already the most tested version of the program. Early February will see the announcement of LibreOffice 25.2.

LibreOffice users, free software advocates and community members can support The Document Foundation by donating at www.libreoffice.org/donate.

Enterprise deploying LibreOffice can also donate, although the best solution for their needs would be to look for the enterprise optimized versions of the software (with Long Term Support for security and Service Level Agreements to protect their investment) at www.libreoffice.org/download/libreoffice-in-business/.